Contents

- 1. HBase Java API
- 1.1 Admin
- 1.2 TableDescriptor
- 1.3 HBaseConfiguration
- 1.4 Table
- 1.5 Put
- 1.6 Get
- 1.7 Delete
- 1.8 Result
- 2. HBase Java API Examples
- 2.1 Dependencies in pom.xml
- 2.2 Utility Class Util
- 2.3 Creating a Table: Creation
- 2.4 Inserting Data: Insertion
- 2.5 Reading Data: Selection
- 2.6 Deleting Data: Deletion
- 2.7 HBase Java API Code
- 2.8 Results
- References

1. HBase Java API

1.1 Admin

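Admin is one of the most important interfaces for working with HBase. It belongs to the org.apache.hadoop.hbase.client package and is used for administrative tasks such as creating, disabling, and deleting tables. Obtain an instance with Connection.getAdmin().

| Selected Admin methods | Description |
|---|---|
| void createTable(TableDescriptor desc) | Creates a new table |
| void disableTable(TableName tableName) | Disables a table |
| void deleteTable(TableName tableName) | Deletes a table (the table must be disabled first) |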

1.2 TableDescriptor

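A TableDescriptor holds the schema of an HBase table, such as its column families. org.apache.hadoop.hbase.client.TableDescriptorBuilder provides a convenient way to build TableDescriptor objects.

| Selected TableDescriptorBuilder methods | Description |
|---|---|
| static TableDescriptorBuilder newBuilder(final TableName name) | Creates a builder for a table descriptor with the given TableName |
| TableDescriptorBuilder setColumnFamily(final ColumnFamilyDescriptor family) | Adds a column family descriptor to the table descriptor |
| TableDescriptor build() | Builds the TableDescriptor |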

1.3 HBaseConfiguration

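HBaseConfiguration adds the HBase configuration files to a Hadoop Configuration. It belongs to the org.apache.hadoop.hbase package.

| Method | Description |
|---|---|
| static Configuration create() | Creates a Configuration with the HBase resources (such as hbase-site.xml) loaded |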

1.4 Table

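Table is the interface used to communicate with a single HBase table. It belongs to the org.apache.hadoop.hbase.client package.

| Selected Table methods | Description |
|---|---|
| void close() | Releases all resources held by the Table |
| void delete(Delete delete) | Deletes the specified cells or row |
| Result get(Get get) | Retrieves the specified cells or row |
| void put(Put put) | Writes data to the table |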
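Table.close() matters because Table, like Admin and Connection, implements java.io.Closeable and holds resources until it is closed. The following is a minimal JDK-only sketch of the try-with-resources pattern that guarantees the close; CloseDemo and Resource are hypothetical names standing in for real HBase objects, and no HBase call is made.

```java
import java.util.ArrayList;
import java.util.List;

/** JDK-only sketch; Resource is a hypothetical stand-in for a Closeable such as Table. */
class CloseDemo {
    static final List<String> log = new ArrayList<>();

    static class Resource implements AutoCloseable {
        private final String name;

        Resource(String name) {
            this.name = name;
            log.add("open " + name);
        }

        @Override
        public void close() {
            log.add("close " + name);
        }
    }

    /** Opens two resources, optionally fails, and returns the recorded events. */
    static List<String> run(boolean fail) {
        log.clear();
        try (Resource conn = new Resource("connection");
             Resource table = new Resource("table")) {
            if (fail) {
                throw new IllegalStateException("simulated failure");
            }
            log.add("work done");
        } catch (IllegalStateException e) {
            // Both resources are already closed by the time this block runs.
            log.add("caught " + e.getMessage());
        }
        return new ArrayList<>(log);
    }

    public static void main(String[] args) {
        System.out.println(run(false));
        System.out.println(run(true));
    }
}
```

Resources are closed in the reverse order of their declaration, and they are closed whether or not the body throws, which is why the pattern suits short-lived Table and Admin handles.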

1.5 Put

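Put performs a Put (write) operation on a single row. It belongs to the org.apache.hadoop.hbase.client package.

| Selected Put methods | Description |
|---|---|
| Put(byte[] row) | Creates a Put operation for the given row key |
| Put addColumn(byte[] family, byte[] qualifier, byte[] value) | Adds the given column and value to the Put |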
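Every argument of Put is a byte[], so strings have to be encoded before they are written. The sketch below uses only the JDK to show the idea; the class ByteKeys and its two helpers are hypothetical stand-ins for Bytes.toBytes(String) and Bytes.toString(byte[]) in org.apache.hadoop.hbase.util.Bytes, which likewise encode and decode as UTF-8.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical JDK-only stand-in for the string helpers of HBase's Bytes class. */
class ByteKeys {
    /** Encodes a string as UTF-8, the form in which it would be handed to Put. */
    static byte[] toBytes(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    /** Decodes UTF-8 bytes; null (for example a missing cell) stays null. */
    static String toString(byte[] b) {
        return b == null ? null : new String(b, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] value = toBytes("128");
        // The value is stored as the three characters '1', '2', '8', not as the integer 128.
        System.out.println(value.length);
        System.out.println(toString(value));
    }
}
```

Because the value is stored as text, a reader has to decode it the same way it was encoded; mixing string and numeric encodings for one column would make the stored bytes ambiguous.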

1.6 Get

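Get performs a Get (read) operation on a single row. It belongs to the org.apache.hadoop.hbase.client package.

| Selected Get methods | Description |
|---|---|
| Get(byte[] row) | Creates a Get operation for the given row key |
| Get addFamily(byte[] family) | Retrieves all columns of the given column family |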

1.7 Delete

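Delete performs a delete operation on a single row. It belongs to the org.apache.hadoop.hbase.client package.

| Selected Delete methods | Description |
|---|---|
| Delete(byte[] row) | Creates a Delete operation for the given row key |
| Delete addFamily(final byte[] family) | Deletes all versions of all columns in the given column family |
| Delete addColumn(final byte[] family, final byte[] qualifier) | Deletes the latest version of the given column |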
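The difference between deleting the latest version of a column and deleting a whole column family is easier to see on a small model. The following JDK-only sketch is a simplification, not the HBase API: VersionedFamily and its methods are hypothetical names. In real HBase a delete writes a tombstone marker, and whether an older version is retained at all depends on the column family's VERSIONS setting; the model ignores both.

```java
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy in-memory model of one column family in one row; NOT the HBase API. */
class VersionedFamily {
    // qualifier -> (timestamp -> value); the highest timestamp is the latest version
    private final Map<String, NavigableMap<Long, String>> columns = new TreeMap<>();

    void put(String qualifier, long timestamp, String value) {
        columns.computeIfAbsent(qualifier, q -> new TreeMap<>()).put(timestamp, value);
    }

    /** Mirrors the intent of Delete.addColumn: drop only the newest version. */
    void deleteLatest(String qualifier) {
        NavigableMap<Long, String> versions = columns.get(qualifier);
        if (versions != null) {
            versions.pollLastEntry();
            if (versions.isEmpty()) {
                columns.remove(qualifier);
            }
        }
    }

    /** Mirrors the intent of Delete.addFamily: drop every version of every column. */
    void deleteFamily() {
        columns.clear();
    }

    /** Returns the newest surviving value of the column, or null if none is left. */
    String latest(String qualifier) {
        NavigableMap<Long, String> versions = columns.get(qualifier);
        return versions == null ? null : versions.lastEntry().getValue();
    }

    public static void main(String[] args) {
        VersionedFamily course = new VersionedFamily();
        course.put("math", 1L, "120");
        course.put("math", 2L, "128");
        course.deleteLatest("math");
        System.out.println(course.latest("math")); // the older version is visible again
        course.deleteFamily();
        System.out.println(course.latest("math")); // nothing is left
    }
}
```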

1.8 Result

Result holds the single-row result of a Get or Scan query.

| Selected Result methods | Description |
|---|---|
| byte[] getValue(byte[] family, byte[] qualifier) | Returns the latest version of the given column |

2. HBase Java API Examples

2.1 Dependencies in pom.xml

    <dependencies>
      <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.11</version>
        <scope>test</scope>
      </dependency>
      <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-common</artifactId>
        <version>2.5.10</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>2.5.10</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>2.5.10</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>3.3.6</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-auth</artifactId>
        <version>3.3.6</version>
      </dependency>
    </dependencies>

2.2 Utility Class Util

Util opens and closes the connection used to communicate with HBase.

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;

    public class Util {
        // Creates a connection from the HBase settings found on the classpath (for example hbase-site.xml).
        public static Connection getConnection() throws IOException {
            Configuration conf = HBaseConfiguration.create();
            return ConnectionFactory.createConnection(conf);
        }

        // Closes the connection; a null argument is ignored.
        public static void closeConnection(Connection conn) throws IOException {
            if (conn != null) {
                conn.close();
            }
        }
    }

2.3 Creating a Table: Creation

Creation creates tables in HBase. Its method void create(Connection conn, String tableName, String[] columnFamilies) creates the table together with one to three column families.

    import java.io.IOException;

    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
    import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.TableDescriptorBuilder;

    public class Creation {
        public static void create(Connection conn, String tableName, String[] columnFamilies) throws IOException {
            // This example accepts between one and three column families.
            if (columnFamilies.length == 0) {
                System.out.println("please provide at least one column family.");
                return;
            }
            if (columnFamilies.length > 3) {
                System.out.println("please reduce the number of column families.");
                return;
            }
            TableName tableName2 = TableName.valueOf(tableName);
            // try-with-resources closes the Admin on every path, including the early return.
            try (Admin admin = conn.getAdmin()) {
                if (admin.tableExists(tableName2)) {
                    System.out.println("table exists!");
                    return;
                }
                TableDescriptorBuilder tableDescBuilder = TableDescriptorBuilder.newBuilder(tableName2);
                for (String cf : columnFamilies) {
                    ColumnFamilyDescriptor colFamilyDesc = ColumnFamilyDescriptorBuilder.of(cf);
                    tableDescBuilder.setColumnFamily(colFamilyDesc);
                }
                admin.createTable(tableDescBuilder.build());
                System.out.println("create table success!");
            }
        }
    }

2.4 Inserting Data: Insertion

Insertion writes data. Its methods are described below.

| Method | Description |
|---|---|
| void put(Connection conn, String tableName, String row, String family, String column, String data) | Writes a value to the given column of an existing HBase table |
| void put(Connection conn, String tableName, String row, String family, String[] columns, String[] datas) | Writes values to several columns of the given column family in an existing HBase table |

    import java.io.IOException;

    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class Insertion {
        // Writes one value to family:column of the given row.
        public static void put(Connection conn, String tableName, String row, String family, String column, String data)
                throws IOException {
            // Table is Closeable; try-with-resources releases it after the write.
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Put p1 = new Put(Bytes.toBytes(row));
                p1.addColumn(Bytes.toBytes(family), Bytes.toBytes(column), Bytes.toBytes(data));
                table.put(p1);
            }
            System.out.println(String.format("put '%s', '%s', '%s:%s', '%s'", tableName, row, family, column, data));
        }

        // Writes several columns of one family; columns[i] receives datas[i].
        public static void put(Connection conn, String tableName, String row, String family, String[] columns,
                String[] datas) throws IOException {
            if (columns.length != datas.length) {
                System.out.println("the length of columns must equal the length of datas.");
                return;
            }
            for (int i = 0; i < columns.length; i++) {
                put(conn, tableName, row, family, columns[i], datas[i]);
            }
        }
    }

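2.5 Reading Data: Selection

Selection reads data. Its methods are described below.

| Method | Description |
|---|---|
| void get(Connection conn, String tableName, String row) | Prints every cell of the row with the given row key |
| void get(Connection conn, String tableName, String row, String family) | Prints the cells of the given column family in that row |
| void get(Connection conn, String tableName, String row, String family, String qualifier) | Prints the value of the given column of that column family in that row |
| void scan(Connection conn, String tableName) | Prints every record in the table |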

    import java.io.IOException;

    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class Selection {
        // Prints every cell of the given row.
        public static void get(Connection conn, String tableName, String row) throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Get g1 = new Get(Bytes.toBytes(row));
                Result res = table.get(g1);
                // listCells() returns null for an empty result, so check isEmpty() first.
                if (!res.isEmpty()) {
                    for (Cell cell : res.listCells()) {
                        String family2 = Bytes.toString(CellUtil.cloneFamily(cell));
                        String column2 = Bytes.toString(CellUtil.cloneQualifier(cell));
                        String data = Bytes.toString(CellUtil.cloneValue(cell));
                        System.out.println(String.format("get '%s', '%s': %s:%s: %s", tableName, row, family2, column2, data));
                    }
                }
                System.out.println();
            }
        }

        // Prints the cells of one column family of the given row.
        public static void get(Connection conn, String tableName, String row, String family) throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Get g1 = new Get(Bytes.toBytes(row));
                g1.addFamily(Bytes.toBytes(family));
                Result res = table.get(g1);
                if (!res.isEmpty()) {
                    for (Cell cell : res.listCells()) {
                        String column = Bytes.toString(CellUtil.cloneQualifier(cell));
                        String data = Bytes.toString(CellUtil.cloneValue(cell));
                        System.out.println(String.format("get '%s', '%s', '%s': %s: %s", tableName, row, family, column, data));
                    }
                }
                System.out.println();
            }
        }

        // Prints the value of a single column of the given row.
        public static void get(Connection conn, String tableName, String row, String family, String qualifier)
                throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Get g1 = new Get(Bytes.toBytes(row));
                // Restrict the Get to the requested column instead of fetching the whole row.
                g1.addColumn(Bytes.toBytes(family), Bytes.toBytes(qualifier));
                Result res = table.get(g1);
                // getValue returns null when the column is absent; Bytes.toString passes null through.
                String data = Bytes.toString(res.getValue(Bytes.toBytes(family), Bytes.toBytes(qualifier)));
                System.out.println(String.format("get '%s', '%s', '%s:%s': %s\n", tableName, row, family, qualifier, data));
            }
        }

        // Prints every cell of every row in the table.
        public static void scan(Connection conn, String tableName) throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName));
                    ResultScanner scanner = table.getScanner(new Scan())) {
                System.out.println("scan: ");
                for (Result res : scanner) {
                    for (Cell cell : res.listCells()) {
                        String row = Bytes.toString(CellUtil.cloneRow(cell));
                        String columnFamily = Bytes.toString(CellUtil.cloneFamily(cell));
                        String column = Bytes.toString(CellUtil.cloneQualifier(cell));
                        String data = Bytes.toString(CellUtil.cloneValue(cell));
                        System.out.println(String.format("row: %s, family: %s, column: %s, data: %s", row, columnFamily,
                                column, data));
                    }
                }
            }
        }
    }
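A scan returns rows sorted by row key, and HBase compares row keys byte by byte, treating each byte as unsigned. The following JDK-only sketch reproduces that ordering for a few string keys; RowKeyOrder and scanOrder are hypothetical names, and the sketch only sorts keys in memory without contacting HBase.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** JDK-only sketch of byte-wise row-key ordering; the class name is hypothetical. */
class RowKeyOrder {
    /** Compares two keys byte by byte, treating each byte as unsigned (0..255). */
    static final Comparator<byte[]> UNSIGNED_LEXICOGRAPHIC = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int diff = (a[i] & 0xff) - (b[i] & 0xff);
            if (diff != 0) {
                return diff;
            }
        }
        return a.length - b.length; // a shorter key sorts before its extensions
    };

    /** Returns the given row keys in byte-wise lexicographic order. */
    static List<String> scanOrder(List<String> rowKeys) {
        List<byte[]> encoded = new ArrayList<>();
        for (String key : rowKeys) {
            encoded.add(key.getBytes(StandardCharsets.UTF_8));
        }
        encoded.sort(UNSIGNED_LEXICOGRAPHIC);
        List<String> sorted = new ArrayList<>();
        for (byte[] key : encoded) {
            sorted.add(new String(key, StandardCharsets.UTF_8));
        }
        return sorted;
    }

    public static void main(String[] args) {
        System.out.println(scanOrder(Arrays.asList("xiapi", "liming", "xiaoxue")));
        // prints [liming, xiaoxue, xiapi]
        System.out.println(scanOrder(Arrays.asList("9", "10")));
        // prints [10, 9]
    }
}
```

The second call shows why numeric row keys stored as text are usually zero-padded: "10" sorts before "9" because the comparison looks at the first byte only.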

2.6 Deleting Data: Deletion

Deletion deletes data. Its methods are described below.

| Method | Description |
|---|---|
| void delete(Connection conn, String tableName) | Deletes the given table |
| void delete(Connection conn, String tableName, String row) | Deletes the row with the given row key |
| void delete(Connection conn, String tableName, String row, String family) | Deletes the given column family of that row |
| void delete(Connection conn, String tableName, String row, String family, String column) | Deletes the latest version of the given column of that row |
| void delete(Connection conn, String tableName, String row, String family, String[] columns) | Deletes the latest version of several columns in the given column family of that row |

    import java.io.IOException;

    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class Deletion {
        // Deletes the whole table if it exists.
        public static void delete(Connection conn, String tableName) throws IOException {
            TableName tableName2 = TableName.valueOf(tableName);
            try (Admin admin = conn.getAdmin()) {
                if (!admin.tableExists(tableName2)) {
                    return;
                }
                try {
                    // A table must be disabled before it can be deleted.
                    admin.disableTable(tableName2);
                    admin.deleteTable(tableName2);
                    // Report success only when both calls completed.
                    System.out.println("the deletion of " + tableName + " succeeds");
                } catch (IOException e) {
                    e.printStackTrace();
                    System.out.println("the deletion of " + tableName + " fails");
                }
            }
        }

        // Deletes an entire row.
        public static void delete(Connection conn, String tableName, String row) throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Delete d1 = new Delete(Bytes.toBytes(row));
                table.delete(d1);
            }
        }

        // Deletes all versions of all columns of one column family in a row.
        public static void delete(Connection conn, String tableName, String row, String family) throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Delete d1 = new Delete(Bytes.toBytes(row));
                d1.addFamily(Bytes.toBytes(family));
                table.delete(d1);
            }
        }

        // Deletes the latest version of one column in a row.
        public static void delete(Connection conn, String tableName, String row, String family, String column)
                throws IOException {
            try (Table table = conn.getTable(TableName.valueOf(tableName))) {
                Delete d1 = new Delete(Bytes.toBytes(row));
                d1.addColumn(Bytes.toBytes(family), Bytes.toBytes(column));
                table.delete(d1);
            }
        }

        // Deletes the latest version of several columns of one column family in a row.
        public static void delete(Connection conn, String tableName, String row, String family, String[] columns)
                throws IOException {
            for (String col : columns) {
                delete(conn, tableName, row, family, col);
            }
        }
    }

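2.7 HBase Java API Code

The following code uses the HBase Java API to create the table below. Here name is the row key, grade and course are column families, and chinese, math, and english are columns of course:

| name | grade | course:chinese | course:math | course:english |
|---|---|---|---|---|
| xiapi | 1 | 97 | 128 | 85 |
| xiaoxue | 2 | 90 | 120 | 90 |
| liming | 3 | | | |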

    import org.apache.hadoop.hbase.client.Connection;

    public class App {
        public static void main(String[] args) throws Exception {
            Connection conn = Util.getConnection();
            try {
                String[] col1 = { "chinese", "math", "english" };

                // Drop any table left over from a previous run, then recreate it.
                Deletion.delete(conn, "scores");
                Creation.create(conn, "scores", new String[] { "grade", "course" });

                // The grade family uses an empty column qualifier.
                Insertion.put(conn, "scores", "liming", "grade", "", "3");
                Insertion.put(conn, "scores", "xiapi", "grade", "", "1");
                Insertion.put(conn, "scores", "xiapi", "course", col1, new String[] { "97", "128", "85" });
                Insertion.put(conn, "scores", "xiaoxue", "grade", "", "2");
                Insertion.put(conn, "scores", "xiaoxue", "course", col1, new String[] { "90", "120", "90" });

                // Read by column family, by column, by row, and finally scan the whole table.
                Selection.get(conn, "scores", "xiaoxue", "grade");
                Selection.get(conn, "scores", "xiaoxue", "course");
                Selection.get(conn, "scores", "xiapi", "course", "math");
                Selection.get(conn, "scores", "xiapi");
                Selection.scan(conn, "scores");

                // Delete a column family, a column, another column family, and a row, then scan again.
                Deletion.delete(conn, "scores", "xiapi", "grade");
                Deletion.delete(conn, "scores", "xiapi", "course", "math");
                Deletion.delete(conn, "scores", "xiaoxue", "course");
                Deletion.delete(conn, "scores", "liming");
                Selection.scan(conn, "scores");

                // Remove the table itself.
                Deletion.delete(conn, "scores");
            } finally {
                // Close the connection even if one of the steps above fails.
                Util.closeConnection(conn);
            }
        }
    }
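To reason about what the second scan in App should show, it can help to replay the same writes and deletions on a plain nested map. The sketch below is a JDK-only toy model, not the HBase API: ScoresModel and its methods are hypothetical names, versions and timestamps are ignored, and the natural String ordering of TreeMap is assumed to match HBase's byte ordering, which holds for the ASCII keys used here.

```java
import java.util.TreeMap;

/** Toy model of the scores table: row -> family -> qualifier -> value. NOT the HBase API. */
class ScoresModel {
    final TreeMap<String, TreeMap<String, TreeMap<String, String>>> rows = new TreeMap<>();

    void put(String row, String family, String qualifier, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            .put(qualifier, value);
    }

    void deleteRow(String row) {
        rows.remove(row);
    }

    void deleteFamily(String row, String family) {
        TreeMap<String, TreeMap<String, String>> families = rows.get(row);
        if (families != null) {
            families.remove(family);
            if (families.isEmpty()) {
                rows.remove(row); // a row without cells does not appear in a scan
            }
        }
    }

    void deleteColumn(String row, String family, String qualifier) {
        TreeMap<String, TreeMap<String, String>> families = rows.get(row);
        if (families == null || !families.containsKey(family)) {
            return;
        }
        families.get(family).remove(qualifier);
        if (families.get(family).isEmpty()) {
            deleteFamily(row, family);
        }
    }

    /** Replays the writes and deletions performed by App on the model. */
    static ScoresModel afterAppDeletions() {
        ScoresModel m = new ScoresModel();
        String[] cols = { "chinese", "math", "english" };
        String[] xiapi = { "97", "128", "85" };
        String[] xiaoxue = { "90", "120", "90" };
        m.put("liming", "grade", "", "3");
        m.put("xiapi", "grade", "", "1");
        m.put("xiaoxue", "grade", "", "2");
        for (int i = 0; i < cols.length; i++) {
            m.put("xiapi", "course", cols[i], xiapi[i]);
            m.put("xiaoxue", "course", cols[i], xiaoxue[i]);
        }
        m.deleteFamily("xiapi", "grade");
        m.deleteColumn("xiapi", "course", "math");
        m.deleteFamily("xiaoxue", "course");
        m.deleteRow("liming");
        return m;
    }

    public static void main(String[] args) {
        System.out.println(afterAppDeletions().rows);
        // prints {xiaoxue={grade={=2}}, xiapi={course={chinese=97, english=85}}}
    }
}
```

Under these assumptions, three cells remain after the deletions: the grade of xiaoxue and the chinese and english scores of xiapi, with xiaoxue listed before xiapi.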

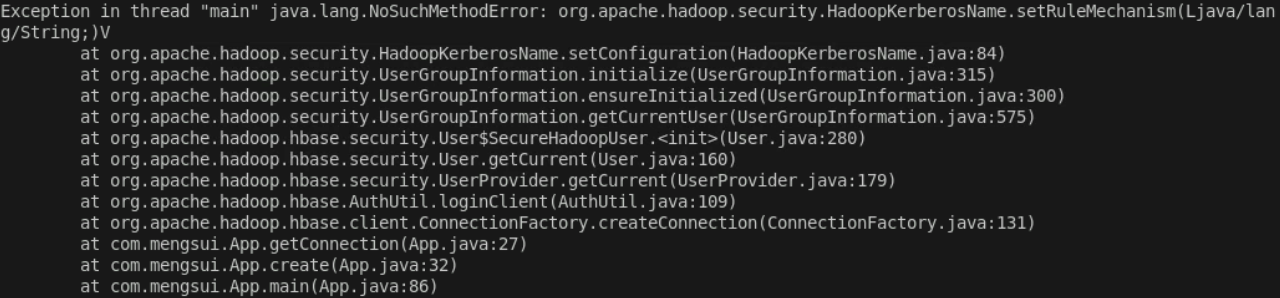

2.8 结果

References

https://blog.csdn.net/weixin_44131414/article/details/116749786 resolved this problem.

Wu Zhangyong and Yang Qiang, 大数据Hadoop3.X分布式处理实战.