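Contents

- Build and Installation

- Preparing the Build Environment

- Building Hudi

- Uploading the Source Package

- Modifying the pom File

- Adding a Repository to Speed Up Dependency Downloads

- Changing Dependency Versions

- Patching the Source for Hadoop 3 Compatibility

- Installing the Kafka Dependencies Manually

- 1) Download the jars

- 2) Install into the local Maven repository

- Resolving the Spark Module Dependency Conflict

- Running the Build Command

- Build Succeeded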

Build and Installation

Preparing the Build Environment

| Component | Version |
|---|---|
| Hive | 3.1.2 |
| Flink | 1.13.6, Scala 2.12 |
| Spark | 3.2.2, Scala 2.12 |
| Hadoop | 3.1.3 |

1)安装Maven

(1)上传apache-maven-3.6.1-bin.tar.gz到/opt/software目录,并解压更名

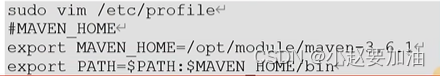
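The extract-and-rename step can be sketched as a small shell function. This is only an illustration: the install root `/opt/module`, the short name `maven-3.6.1`, and the function name `install_maven` are assumptions, not values given in this guide.

```shell
# Sketch: unpack a Maven binary tarball and rename the unpacked directory.
# install_maven is a hypothetical helper; target paths are assumptions.
install_maven() {
  local tarball="$1"    # e.g. /opt/software/apache-maven-3.6.1-bin.tar.gz
  local dest="$2"       # assumed install root, e.g. /opt/module
  local new_name="$3"   # assumed short name, e.g. maven-3.6.1

  mkdir -p "$dest" || return 1
  tar -zxf "$tarball" -C "$dest" || return 1

  # The archive unpacks to apache-maven-<version>; derive that from the file name.
  local unpacked="${tarball##*/}"
  unpacked="${unpacked%-bin.tar.gz}"
  mv "$dest/$unpacked" "$dest/$new_name"
}

# Example:
#   install_maven /opt/software/apache-maven-3.6.1-bin.tar.gz /opt/module maven-3.6.1
```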

(2) Add the environment variables to /etc/profile
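The guide does not list the variables themselves. A minimal sketch, assuming the usual `MAVEN_HOME` plus `PATH` pair and a hypothetical helper `add_maven_env`; the Maven directory in the example is an assumption as well.

```shell
# Sketch: append MAVEN_HOME and PATH entries to a profile file.
# add_maven_env is a hypothetical helper, not part of Maven.
add_maven_env() {
  local maven_home="$1"   # assumed location, e.g. /opt/module/maven-3.6.1
  local profile="$2"      # e.g. /etc/profile

  # Do nothing if MAVEN_HOME is already exported, so re-runs add no duplicates.
  if grep -q '^export MAVEN_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi

  {
    echo '# MAVEN_HOME'
    echo "export MAVEN_HOME=$maven_home"
    echo 'export PATH=$PATH:$MAVEN_HOME/bin'
  } >> "$profile"
}

# Example (needs write access to /etc/profile):
#   add_maven_env /opt/module/maven-3.6.1 /etc/profile
```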

(3) Test the installation

source /etc/profile

mvn -v

2) Switch to the Aliyun mirror

(1) Edit settings.xml to point to the Aliyun repository

<mirrors>
  <mirror>
    <id>alimaven</id>
    <name>aliyun maven</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    <mirrorOf>central</mirrorOf>
  </mirror>
</mirrors>

Building Hudi

Uploading the Source Package

Upload hudi-0.12.0.src.tgz to /opt/software and extract it

tar -zxvf hudi-0.12.0.src.tgz -C /opt/software

修改pom文件

vim /opt/software/hudi-0.12.0/pom.xml

新增repository加速依赖下载

<repository><id>nexus-aliyun</id><name>nexus-aliyun</name><url>http://maven.aliyun.com/nexus/content/groups/public/</url><releases><enabled>true</enabled></releases>< snapshots><enabled>false</enabled></snapshots></repository>

修改依赖的组件版本

<hadoop.version>3.1.3</hadoop.version>

<hive.version>3.1.2</hive.version>

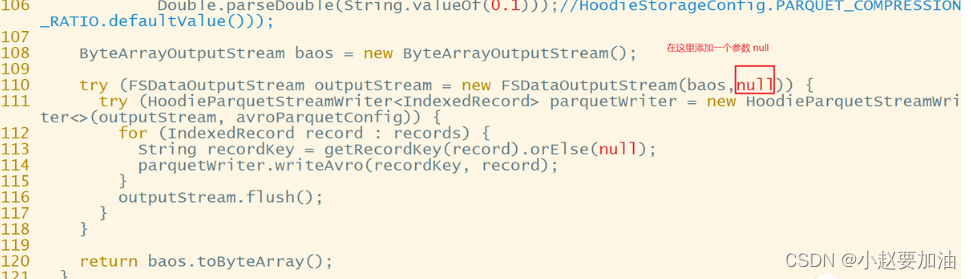
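The two version properties can also be changed without opening an editor. A minimal sketch using `sed`; `set_pom_property` is a hypothetical helper, and it rewrites every element with the given name in the file, so it is worth reviewing the result afterwards.

```shell
# Sketch: set the text of every <name>...</name> element in a pom file.
# set_pom_property is a hypothetical helper, not a Maven command.
set_pom_property() {
  local pom="$1" name="$2" value="$3"
  # -i.bak edits in place and keeps the previous contents as <pom>.bak
  sed -i.bak "s|<${name}>[^<]*</${name}>|<${name}>${value}</${name}>|g" "$pom"
}

# Example (paths and versions from this guide):
#   set_pom_property /opt/software/hudi-0.12.0/pom.xml hadoop.version 3.1.3
#   set_pom_property /opt/software/hudi-0.12.0/pom.xml hive.version 3.1.2
```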

修改源码兼容hadoop3

Hudi默认依赖的hadoop2,要兼容hadoop3,除了修改版本,还需要修改如下代码

vim /opt/software/hudi-0.12.0/hudi-common/src/main/java/org/apache/hudi/common/table/log/block/HoodieParquetDataBlock.java

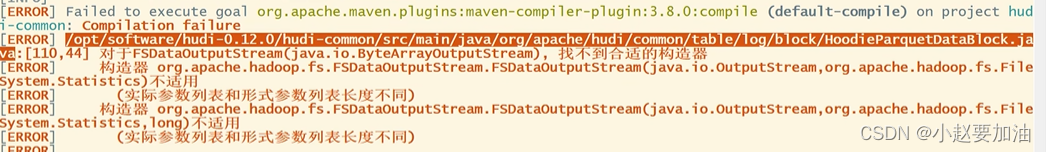
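The edit itself appears only in the screenshot. As a hedged sketch, assuming the change is to pass `null` as a second argument to the `FSDataOutputStream` constructor that wraps `baos` (Hadoop 3 provides only the constructors that also take a statistics argument), it could be applied with `sed`. The helper name `patch_fsdataoutputstream` and the exact source text being matched are assumptions; inspect the file before and after.

```shell
# Sketch: add a null second argument to the one-argument FSDataOutputStream call.
# patch_fsdataoutputstream is a hypothetical helper; the matched text is an
# assumption about what HoodieParquetDataBlock.java contains.
patch_fsdataoutputstream() {
  local java_file="$1"
  # -i.bak edits in place and keeps the original as <file>.bak.
  # Re-running is harmless: the pattern no longer matches once patched.
  sed -i.bak 's/new FSDataOutputStream(baos)/new FSDataOutputStream(baos, null)/' "$java_file"
}

# Example:
#   patch_fsdataoutputstream /opt/software/hudi-0.12.0/hudi-common/src/main/java/org/apache/hudi/common/table/log/block/HoodieParquetDataBlock.java
```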

则会因为hadoop2.x和3.x版本兼容问题,报错如下:

手动安装Kafka依赖

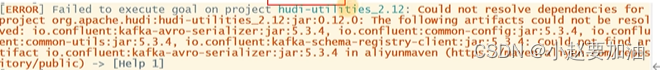

有几个kafka的依赖需要手动安装,否则编译报错如下:

1) Download the jars

Download from: http://packages.confluent.io/archive/5.3/confluent-5.3.4-2.12.zip

After extracting the archive, locate the following jars and upload them to server hadoop1

- common-config-5.3.4.jar

- common-utils-5.3.4.jar

- kafka-avro-serializer-5.3.4.jar

- kafka-schema-registry-client-5.3.4.jar

2) Install into the local Maven repository
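This step runs the same `mvn install:install-file` command once per jar, so the repetition can be folded into a loop. A minimal sketch: `install_confluent_jars` and the `MVN_CMD` override (handy for a dry run with `MVN_CMD=echo`) are hypothetical names introduced here.

```shell
# Sketch: install the four Confluent 5.3.4 jars into the local Maven repository.
# install_confluent_jars and MVN_CMD are hypothetical; mvn is used by default.
install_confluent_jars() {
  local jar_dir="$1"      # directory holding the uploaded jars
  local version="5.3.4"
  local artifact

  for artifact in common-config common-utils kafka-avro-serializer kafka-schema-registry-client; do
    ${MVN_CMD:-mvn} install:install-file \
      -DgroupId=io.confluent \
      -DartifactId="$artifact" \
      -Dversion="$version" \
      -Dpackaging=jar \
      -Dfile="$jar_dir/$artifact-$version.jar" || return 1
  done
}

# Dry run that only prints the commands:
#   MVN_CMD=echo install_confluent_jars .
# Real install from the current directory:
#   install_confluent_jars .
```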

mvn install:install-file -DgroupId=io.confluent -DartifactId=common-config -Dversion=5.3.4 -Dpackaging=jar -Dfile=./common-config-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=common-utils -Dversion=5.3.4 -Dpackaging=jar -Dfile=./common-utils-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-avro-serializer -Dversion=5.3.4 -Dpackaging=jar -Dfile=./kafka-avro-serializer-5.3.4.jar

mvn install:install-file -DgroupId=io.confluent -DartifactId=kafka-schema-registry-client -Dversion=5.3.4 -Dpackaging=jar -Dfile=./kafka-schema-registry-client-5.3.4.jar

Resolving the Spark Module Dependency Conflict

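The Hive version was changed to 3.1.2, which brings in Jetty 9.3.x, while Hudi itself uses Jetty 9.4.x, so the two conflict.

1) Modify the pom file of hudi-spark-bundle: exclude the older Jetty and add the Jetty version specified by Hudi:

vim /opt/software/hudi-0.12.0/packaging/hudi-spark-bundle/pom.xml

Around line 382, modify as follows: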

<!-- Hive -->
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.pentaho</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service-rpc</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-jdbc</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-metastore</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.datanucleus</groupId>
      <artifactId>datanucleus-core</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-common</artifactId>
  <version>${hive.version}</version>
  <scope>${spark.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty.orbit</groupId>
      <artifactId>javax.servlet</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<!-- Add Jetty at the version configured by Hudi -->
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>

Otherwise, inserting data into a Hudi table with Spark fails with the following error:

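java.lang.NoSuchMethodError: org.apache.hudi.org.apache.jetty.server.session.SessionHandler.setHttpOnly(Z)V

2) Modify the pom file of hudi-utilities-bundle: exclude the older Jetty and add the Jetty version specified by Hudi:

vim /opt/software/hudi-0.12.0/packaging/hudi-utilities-bundle/pom.xml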

Around line 405, modify as follows:

<!-- Hoodie -->
<dependency>
  <groupId>org.apache.hudi</groupId>
  <artifactId>hudi-common</artifactId>
  <version>${project.version}</version>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>org.apache.hudi</groupId>
  <artifactId>hudi-client-common</artifactId>
  <version>${project.version}</version>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<!-- Hive -->
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <artifactId>servlet-api</artifactId>
      <groupId>javax.servlet</groupId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.pentaho</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-service-rpc</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-jdbc</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-metastore</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>javax.servlet</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.datanucleus</groupId>
      <artifactId>datanucleus-core</artifactId>
    </exclusion>
    <exclusion>
      <groupId>javax.servlet.jsp</groupId>
      <artifactId>*</artifactId>
    </exclusion>
    <exclusion>
      <artifactId>guava</artifactId>
      <groupId>com.google.guava</groupId>
    </exclusion>
  </exclusions>
</dependency>
<dependency>
  <groupId>${hive.groupid}</groupId>
  <artifactId>hive-common</artifactId>
  <version>${hive.version}</version>
  <scope>${utilities.bundle.hive.scope}</scope>
  <exclusions>
    <exclusion>
      <groupId>org.eclipse.jetty.orbit</groupId>
      <artifactId>javax.servlet</artifactId>
    </exclusion>
    <exclusion>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>*</artifactId>
    </exclusion>
  </exclusions>
</dependency>
<!-- Add Jetty at the version configured by Hudi -->
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-server</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-util</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-webapp</artifactId>
  <version>${jetty.version}</version>
</dependency>
<dependency>
  <groupId>org.eclipse.jetty</groupId>
  <artifactId>jetty-http</artifactId>
  <version>${jetty.version}</version>
</dependency>

Otherwise, inserting data into a Hudi table with the DeltaStreamer tool also fails with a Jetty error.

Running the Build Command

mvn clean package -DskipTests -Dspark3.2 -Dflink1.13 -Dscala-2.12 -Dhadoop.version=3.1.3 -Pflink-bundle-shade-hive3

Build Succeeded

After the build completes, being able to start hudi-cli confirms that it succeeded:

hudi-cli/hudi-cli.sh
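The build output can also be checked directly. A minimal sketch, assuming the standard Maven layout in which each module under `packaging/` writes its jar to its own `target/` directory; `list_bundle_jars` is a hypothetical helper, and the exact jar names depend on the profiles used.

```shell
# Sketch: list the bundle jars produced under <hudi_root>/packaging.
# list_bundle_jars is a hypothetical helper; the directory layout is an assumption.
list_bundle_jars() {
  local hudi_root="$1"
  # Match hudi-*-bundle*.jar inside any target/ directory, skipping source jars.
  find "$hudi_root/packaging" -path '*/target/*' -name 'hudi-*-bundle*.jar' \
    ! -name '*-sources.jar' | sort
}

# Example:
#   list_bundle_jars /opt/software/hudi-0.12.0
```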